Feature extraction, dimensionality reduction and manifold learning

DRR: Dimensionality Reduction via Regression

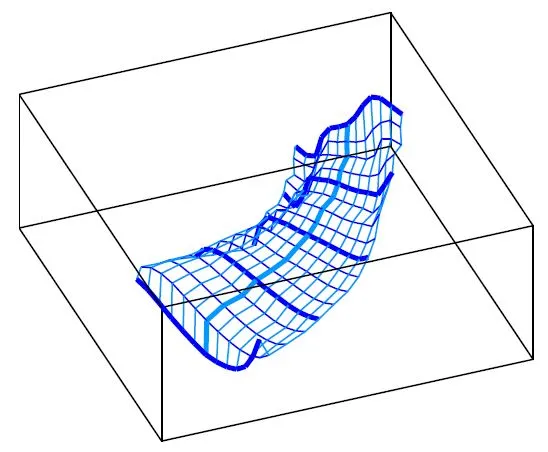

Dimensionality Reduction via Regression (DRR) is a manifold learning technique that removes the residual statistical dependence between PCA components caused by the curvature of the dataset. DRR uses multivariate regression to predict each PCA coefficient from neighboring coefficients, which generalizes PPA. It thereby extends PCA-based dimensionality reduction by using curves instead of straight lines.
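The regression idea can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the DRR toolbox. It rotates the data with PCA, then replaces every score after the first by its residual from a least-squares polynomial regression on the preceding, higher-variance scores. The helper names (`pca_rotate`, `poly_features`, `drr_transform`), the ordering of the predictors and the polynomial degree are hypothetical choices. The published method may use a different regression model.

```python
import numpy as np

def pca_rotate(X):
    """Center X (n_samples x n_dims) and return its PCA scores, highest variance first."""
    Xc = X - X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(evals)[::-1]
    return Xc @ evecs[:, order]

def poly_features(Z, degree):
    """Bias column plus powers 1..degree of every column of Z (no cross terms)."""
    cols = [np.ones(len(Z))]
    for p in range(1, degree + 1):
        cols.extend((Z ** p).T)
    return np.column_stack(cols)

def drr_transform(X, degree=2):
    """Hypothetical DRR-style transform: keep the first PCA score and replace each
    later score by the part that a polynomial in the earlier scores cannot predict."""
    S = pca_rotate(X)
    out = S.copy()
    for k in range(1, S.shape[1]):
        F = poly_features(S[:, :k], degree)
        coef, *_ = np.linalg.lstsq(F, S[:, k], rcond=None)
        out[:, k] = S[:, k] - F @ coef   # unpredictable part of score k
    return out

# Usage: points on a noisy parabola, a curved 1-D manifold embedded in 2-D
rng = np.random.default_rng(0)
t = rng.uniform(-2, 2, 500)
X = np.column_stack([t, 0.3 * t ** 2 + 0.05 * rng.standard_normal(500)])
print(pca_rotate(X).var(axis=0), drr_transform(X).var(axis=0))
```

On curved data like this, the second DRR component keeps far less variance than the second PCA component, because most of that variance is predictable from the first score. Each residual depends only on the scores that precede it. With the regression coefficients stored, the sketch can therefore be inverted one component at a time by adding each prediction back.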

References

- Dimensionality reduction via regression in hyperspectral imagery. Laparra, V., Malo, J., and Camps-Valls, G. IEEE Journal on Selected Topics in Signal Processing, 9(6):1026-1036, 2015.

EPLS: Unsupervised Sparse Convolutional Neural Networks for Feature Extraction

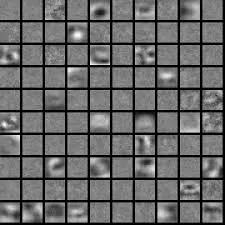

EPLS (Enhancing Population and Lifetime Sparsity) is an unsupervised feature learning algorithm that learns sparse representations for convolutional neural networks. It is meta-parameter free, simple, and fast.
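The two kinds of sparsity in the name can be illustrated with a small target-selection routine. The sketch is modeled loosely on how EPLS builds sparse one-hot targets for a mini-batch from a layer's activations. The function name `epls_targets`, the min-max scaling and the inhibition increment `n_units / n_samples` are assumptions. The step that trains the layer toward these targets is omitted.

```python
import numpy as np

def epls_targets(H):
    """Sparse one-hot targets from activations H (n_samples x n_units).

    Population sparsity: every sample activates exactly one unit.
    Lifetime sparsity: an inhibition term grows each time a unit is chosen,
    so later samples are pushed toward units that have been used less.
    """
    n_samples, n_units = H.shape
    span = H.max() - H.min()
    # Rescale activations to [0, 1] so they are comparable with the inhibition term
    Hn = (H - H.min()) / span if span > 0 else np.zeros_like(H, dtype=float)
    inhibition = np.zeros(n_units)
    T = np.zeros((n_samples, n_units))
    for n in range(n_samples):
        k = np.argmax(Hn[n] - inhibition)      # strongest unit after inhibition
        T[n, k] = 1.0
        inhibition[k] += n_units / n_samples   # discourage reusing unit k
    return T

# Usage: random activations for a batch of 8 samples and 4 hidden units
rng = np.random.default_rng(1)
print(epls_targets(rng.random((8, 4))))
```

With constant activations and a batch size that is a multiple of the number of units, the selection degenerates to a round-robin. Each unit is then chosen equally often, which is the lifetime-sparsity behavior in its purest form.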

References

- Unrolling loopy top-down semantic feedback in convolutional deep networks. Gatta, C., Romero, A., van de Weijer, J. CVPR Deep Vision Workshop, 2014.

- Unsupervised Deep Feature Extraction of Hyperspectral Images. Romero, A., Gatta, C., Camps-Valls, G. IEEE Workshop on Hyperspectral Image and Signal Processing (WHISPERS), 2014.

- Unsupervised Deep Feature Extraction for Remote Sensing Image Classification. Romero, A., Gatta, C., Camps-Valls, G. IEEE Transactions on Geoscience and Remote Sensing, 2015.

HOCCA: Higher Order Canonical Correlation Analysis

HOCCA is a linear manifold learning technique for several datasets that originate from the same source. It finds independent components within each dataset that are also related across the datasets, thus combining the goals of ICA and CCA.

References

- Spatio-Chromatic Adaptation via Higher-Order Canonical Correlation Analysis of Natural Images. Gutmann, M.U., Laparra, V., Hyvärinen, A., Malo, J. PLoS ONE, 9(2):e86481, 2014.

KEMA: Kernel Manifold Alignment

KSNR: Kernel Signal to Noise Ratio

KSNR is a feature extraction method that maximizes the signal variance while minimizing the noise variance in a reproducing kernel Hilbert space (RKHS). It yields noise-free features for dimensionality reduction and outperforms kernel PCA (kPCA) when the noise is correlated.
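The criterion behind KSNR is a ratio of signal variance to noise variance. The sketch implements only its linear counterpart, which reduces to a generalized eigenproblem of the signal and noise covariances (the same idea as MNF). KSNR solves the analogous problem in an RKHS, which is not shown here. The caller supplies the noise samples in this sketch, and the function name `snr_features` is hypothetical.

```python
import numpy as np

def snr_features(X, N, n_components):
    """Linear SNR-maximizing projections.

    X: signal samples (n x d); N: noise samples (m x d).
    Solves max_w (w^T Cs w) / (w^T Cn w) as a generalized eigenproblem.
    """
    Cs = np.cov(X, rowvar=False)
    Cn = np.cov(N, rowvar=False)
    # Whiten the noise: with Cn = L L^T and w = L^{-T} v, the ratio becomes an
    # ordinary Rayleigh quotient of the symmetric matrix L^{-1} Cs L^{-T}
    L = np.linalg.cholesky(Cn)
    Linv = np.linalg.inv(L)
    evals, V = np.linalg.eigh(Linv @ Cs @ Linv.T)
    order = np.argsort(evals)[::-1][:n_components]
    W = Linv.T @ V[:, order]          # projection vectors in the original coordinates
    return W, evals[order]            # evals are the achieved SNR values

# Usage: the noise is weak along the first axis and strong along the second
rng = np.random.default_rng(2)
X = rng.standard_normal((2000, 2))
N = rng.standard_normal((2000, 2)) * np.array([0.1, 1.0])
W, snr = snr_features(X, N, n_components=2)
print(W[:, 0], snr)
```

The leading projection lines up with the axis where the noise is weakest, even though the signal variance is the same in both directions. Plain PCA could not make that distinction.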

References

- Learning with the kernel signal to noise ratio. Gómez-Chova, L., Camps-Valls, G. IEEE International Workshop on Machine Learning for Signal Processing, MLSP, 2012.

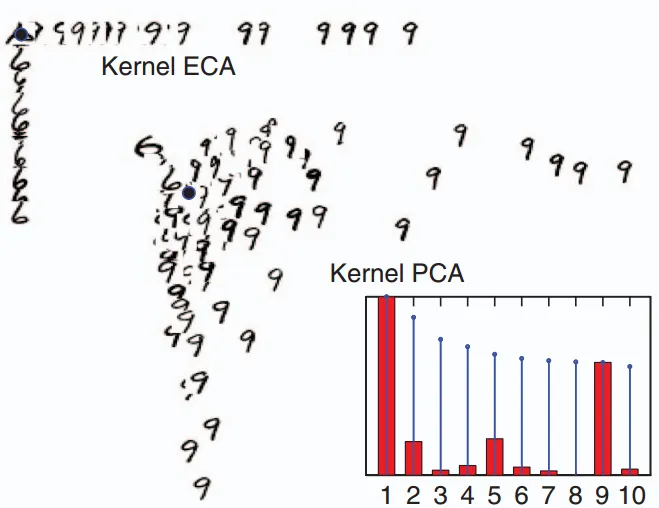

OKECA: Optimized Kernel Entropy Component Analysis

OKECA is a kernel feature extraction method based on entropy estimation in Hilbert spaces. It provides sparse and compact results, useful for data visualization and dimensionality reduction.
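OKECA builds on kernel entropy component analysis (KECA). KECA ranks the eigenvectors of the kernel matrix by their contribution to a Rényi quadratic entropy estimate rather than by eigenvalue. The sketch covers only that KECA baseline with a Gaussian kernel. The additional optimization that distinguishes OKECA is omitted, and `keca` and `sigma` are illustrative names.

```python
import numpy as np

def keca(X, n_components, sigma=1.0):
    """KECA projections of the training samples X (n x d) with a Gaussian kernel."""
    sq = np.sum(X ** 2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2 * X @ X.T, 0)   # squared distances
    K = np.exp(-D2 / (2 * sigma ** 2))
    lam, E = np.linalg.eigh(K)
    lam = np.clip(lam, 0, None)            # remove tiny negative round-off
    # Entropy estimate: V = (1/N^2) 1^T K 1 = (1/N^2) * sum_i lam_i * (1^T e_i)^2
    contrib = lam * E.sum(axis=0) ** 2 / len(X) ** 2
    idx = np.argsort(contrib)[::-1][:n_components]   # largest entropy terms first
    Z = E[:, idx] * np.sqrt(lam[idx])      # scaled eigenvectors = projections
    return Z, contrib[idx]

# Usage
rng = np.random.default_rng(3)
X = rng.standard_normal((40, 3))
Z, entropy_terms = keca(X, n_components=2)
print(Z.shape, entropy_terms)
```

Selecting by entropy contribution instead of eigenvalue is the key difference from kernel PCA. An eigenvector with a large eigenvalue but a near-zero sum contributes almost nothing to the entropy estimate and is skipped.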

References

- Optimized Kernel Entropy Components. Izquierdo-Verdiguier, E., Laparra, V., Jenssen, R., Gómez-Chova, L., Camps-Valls, G. IEEE Transactions on Neural Networks and Learning Systems, 2016.

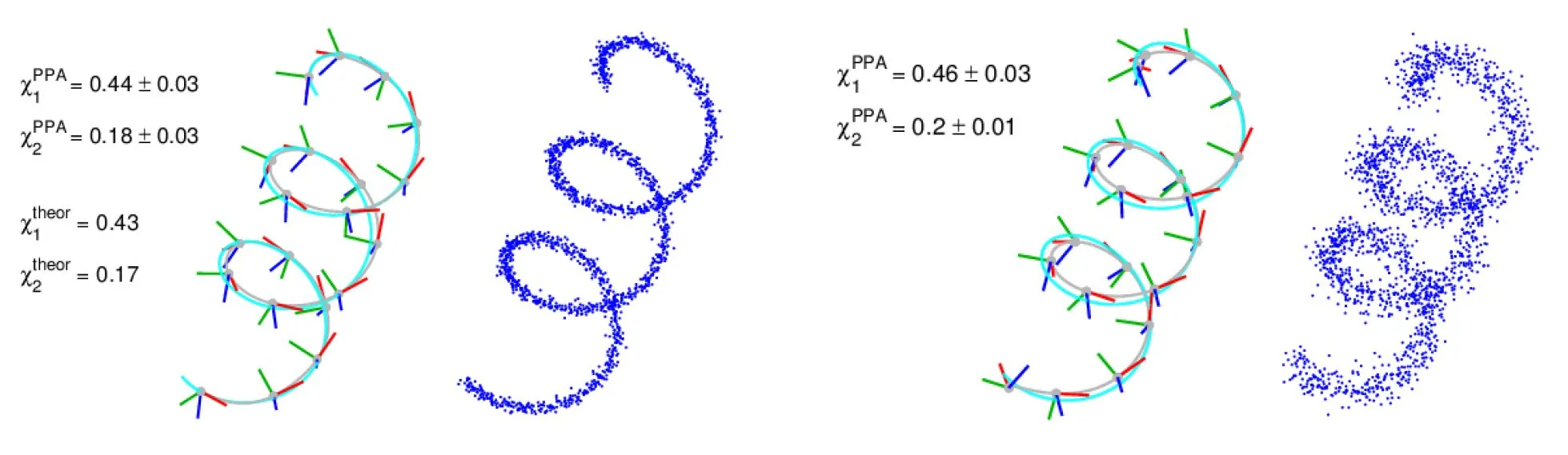

PPA: Principal Polynomial Analysis

Principal Polynomial Analysis (PPA) is a manifold learning technique that generalizes PCA by using principal polynomials to capture nonlinear patterns in the data. It improves the energy compaction of PCA and therefore reduces the error of dimensionality reduction. PPA also defines a manifold-dependent metric that generalizes the Mahalanobis distance to curved manifolds.
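A single PPA-style deflation step can be sketched as follows. The sketch assumes a sequential scheme. The data are projected onto the leading principal direction. A polynomial of that projection is then fitted to the orthogonal residual and subtracted from it. The function name and degree are hypothetical. The full method repeats this step on the residual and also provides the inverse and the metric, none of which are shown.

```python
import numpy as np

def ppa_step(X, degree=2):
    """One polynomial deflation step on centered data X (n x d), n >= d.

    Returns the 1-D projection onto the leading principal direction and the
    (d-1)-D residual left after removing a polynomial prediction from it.
    """
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    v, V_perp = Vt[0], Vt[1:].T           # leading direction and its orthogonal complement
    alpha = X @ v                          # PCA score along v
    R = X @ V_perp                         # coordinates orthogonal to v
    A = np.vander(alpha, degree + 1, increasing=True)   # columns [1, alpha, alpha^2, ...]
    W, *_ = np.linalg.lstsq(A, R, rcond=None)           # least-squares polynomial fit
    return alpha, R - A @ W

# Usage: centered points on a noisy parabola
rng = np.random.default_rng(4)
t = rng.uniform(-2, 2, 500)
X = np.column_stack([t, 0.3 * t ** 2 + 0.05 * rng.standard_normal(500)])
X = X - X.mean(axis=0)
alpha, resid = ppa_step(X)
print(resid.var(), X[:, 1].var())
```

The residual keeps roughly the noise variance only. In PCA, the second coordinate would still carry the whole parabolic bend, which is the energy-compaction gain described above.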

References

- Principal polynomial analysis. Laparra, V., Jiménez, S., Tuia, D., Camps-Valls, G., Malo, J. International Journal of Neural Systems, 24(7), 2014.

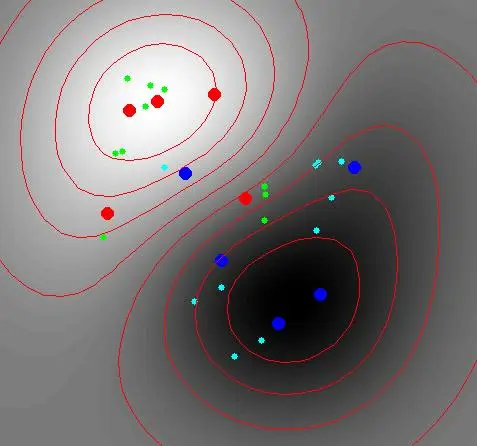

RBIG: Rotation-Based Iterative Gaussianization

RBIG is an invertible multivariate Gaussianization transform that iteratively alternates univariate (marginal) histogram Gaussianization with a multivariate rotation. It is useful for multivariate PDF estimation and related applications.
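The two alternating steps can be sketched as below. This is a simplified sketch, not the RBIG toolbox, and it differs in two ways:

- The marginal step uses ranks and the standard normal inverse CDF instead of a stored, interpolated histogram, so it cannot be inverted for new points.
- The rotation is a random orthogonal matrix, one of the rotation choices ("from ICA to random rotations") discussed in the first reference.

The function names and the iteration count are assumptions.

```python
import numpy as np
from statistics import NormalDist

def marginal_gaussianize(X):
    """Map each column to N(0, 1) through its empirical CDF (rank-based)."""
    n = X.shape[0]
    ranks = np.argsort(np.argsort(X, axis=0), axis=0)   # 0 .. n-1 within each column
    u = (ranks + 0.5) / n                                # empirical CDF values in (0, 1)
    inv_cdf = np.vectorize(NormalDist().inv_cdf)         # standard normal quantile function
    return inv_cdf(u)

def rbig(X, n_iters=20, seed=0):
    """Rotation-based iterative Gaussianization (sketch with random rotations)."""
    rng = np.random.default_rng(seed)
    Z = np.asarray(X, dtype=float)
    d = Z.shape[1]
    for _ in range(n_iters):
        Z = marginal_gaussianize(Z)                       # univariate Gaussianization
        Q, _ = np.linalg.qr(rng.standard_normal((d, d)))  # random orthogonal rotation
        Z = Z @ Q
    return Z

# Usage: skewed, non-Gaussian input
rng = np.random.default_rng(5)
X = rng.exponential(size=(1000, 2))
Z = rbig(X, n_iters=10)
print(Z.mean(axis=0), Z.std(axis=0))
```

The marginal step alone cannot remove dependence between variables. The rotations mix the variables so that later marginal steps can act on new directions.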

References

- Iterative gaussianization: From ICA to random rotations. Laparra, V., Camps-Valls, G., Malo, J. IEEE Transactions on Neural Networks, 22(4):537-549, 2011.

- PCA Gaussianization for one-class remote sensing image classification. Laparra, V., Muñoz-Marí, J., Camps-Valls, G., Malo, J. Proceedings of SPIE, 7477, 2009.

- PCA Gaussianization for image processing. Laparra, V., Camps-Valls, G., Malo, J. Proceedings - International Conference on Image Processing, ICIP, 2009.

ROCK-PCA: Rotated Complex Kernel PCA for Nonlinear Spatio-Temporal Data Analysis

The rotated complex kernel PCA (ROCK-PCA) works in reproducing kernel Hilbert spaces to account for nonlinear processes. It also operates in the complex domain to capture both spatial and temporal features, including time-lagged correlations. An additional rotation improves flexibility and physical consistency. The result is an explicitly resolved spatio-temporal decomposition of Earth and climate data cubes.
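ROCK-PCA builds on complex (Hilbert) PCA. In complex PCA, each time series is replaced by its analytic signal, so that a single complex component can represent a propagating, time-lagged pattern. The sketch implements only this linear complex PCA step with NumPy. The kernel mapping and the extra rotation that ROCK-PCA adds are omitted, and the function names are hypothetical. The analytic signal uses the same FFT construction as `scipy.signal.hilbert`.

```python
import numpy as np

def analytic_signal(x, axis=0):
    """Analytic signal x + i*H[x] computed with the FFT along `axis`."""
    n = x.shape[axis]
    Xf = np.fft.fft(x, axis=axis)
    h = np.zeros(n)                      # keep DC, double positive, drop negative frequencies
    if n % 2 == 0:
        h[0] = h[n // 2] = 1
        h[1:n // 2] = 2
    else:
        h[0] = 1
        h[1:(n + 1) // 2] = 2
    shape = [1] * x.ndim
    shape[axis] = n
    return np.fft.ifft(Xf * h.reshape(shape), axis=axis)

def complex_pca(data, n_components):
    """Linear complex (Hilbert) PCA of a (time x space) data matrix."""
    Z = analytic_signal(data - data.mean(axis=0), axis=0)
    C = Z.conj().T @ Z / Z.shape[0]      # Hermitian spatial covariance
    evals, evecs = np.linalg.eigh(C)
    order = np.argsort(evals)[::-1][:n_components]
    patterns = evecs[:, order]           # complex spatial patterns
    scores = Z @ patterns                # complex temporal scores
    return patterns, scores, evals[order]

# Usage: a wave travelling across 10 sites, 5 full cycles in 200 time steps
t = np.arange(200)[:, None]
s = np.arange(10)[None, :]
data = np.cos(2 * np.pi * 5 * t / 200 - 0.3 * s)
patterns, scores, evals = complex_pca(data, n_components=10)
print(evals[:3] / evals.sum())
```

A real PCA of the same data would need two components (a cosine and a sine pattern) to describe the travelling wave. The complex version captures it in one, with the lag between sites encoded in the phase of `patterns[:, 0]`.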

References

- Nonlinear PCA for Spatio-Temporal Analysis of Earth Observation Data. Bueso, D., Piles, M., and Camps-Valls, G. IEEE Transactions on Geoscience and Remote Sensing, 58(8), 2020.

SIMFEAT: A Simple MATLAB(tm) Toolbox of Linear and Kernel Feature Extraction

SIMFEAT is a toolbox that includes linear and kernel feature extraction methods:

- Linear methods: PCA, MNF, CCA, PLS, OPLS.
- Kernel methods: KPCA, KMNF, KCCA, KPLS, KOPLS, KECA.

References

- Kernel multivariate analysis framework for supervised subspace learning: A tutorial on linear and kernel multivariate methods. Arenas-Garcia, J., Petersen, K.B., Camps-Valls, G., Hansen, L.K. IEEE Signal Processing Magazine, 30(4):16-29, 2013.

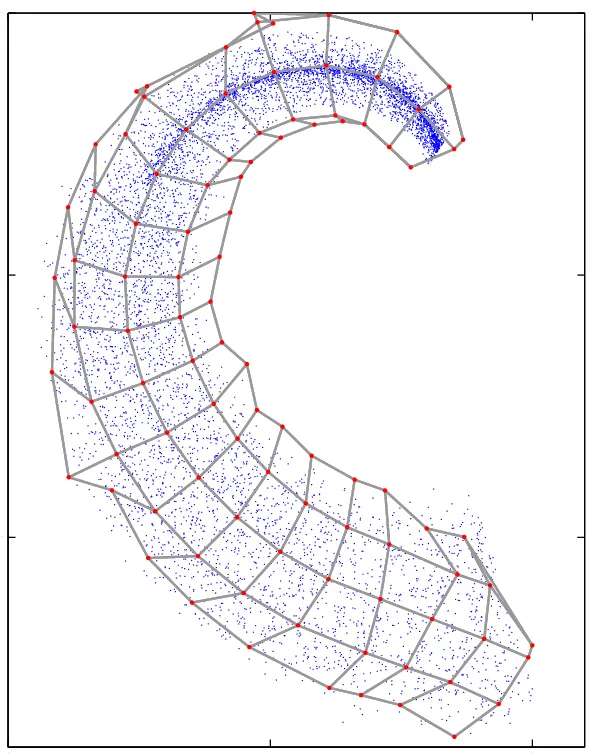

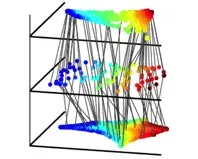

SPCA: Sequential Principal Curves Analysis

SPCA is an invertible manifold learning technique that generalizes PCA by using nonparametric principal curves instead of straight lines. It includes a multivariate histogram equalization step, so that the transform can perform either nonlinear ICA or optimal vector quantization.

References

- Nonlinearities and adaptation of color vision from sequential principal curves analysis. Laparra, V., Jiménez, S., Camps-Valls, G., Malo, J. Neural Computation, 24(10):2751-2788, 2012.

SSKPLS: Semisupervised Kernel Partial Least Squares

SSKPLS uses probabilistic cluster kernels for nonlinear feature extraction. These kernels are built from the data and outperform both standard kernel functions and information-theoretic kernels such as the Fisher and mutual information kernels.

References

- Spectral clustering with the probabilistic cluster kernel. Izquierdo-Verdiguier, E., Jenssen, R., Gómez-Chova, L., Camps-Valls, G. Neurocomputing, 149(C):1299-1304, 2015.

- Semisupervised kernel feature extraction for remote sensing image analysis. Izquierdo-Verdiguier, E., Gómez-Chova, L., Bruzzone, L., Camps-Valls, G. IEEE Transactions on Geoscience and Remote Sensing, 52(9):5567-5578, 2014.

SSMA: SemiSupervised Manifold Alignment

The SSMA Toolbox is a MATLAB tool for semisupervised manifold alignment of data without corresponding pairs, requiring only a small set of labeled samples in each domain.

References

- Semisupervised manifold alignment of multimodal remote sensing images. Tuia, D., Volpi, M., Trolliet, M., Camps-Valls, G. IEEE Transactions on Geoscience and Remote Sensing, 52(12):7708-7720, 2014.